What Is the Usability Renewal Cycle?

Every professional device interface eventually drifts out of sync with the people who use it.

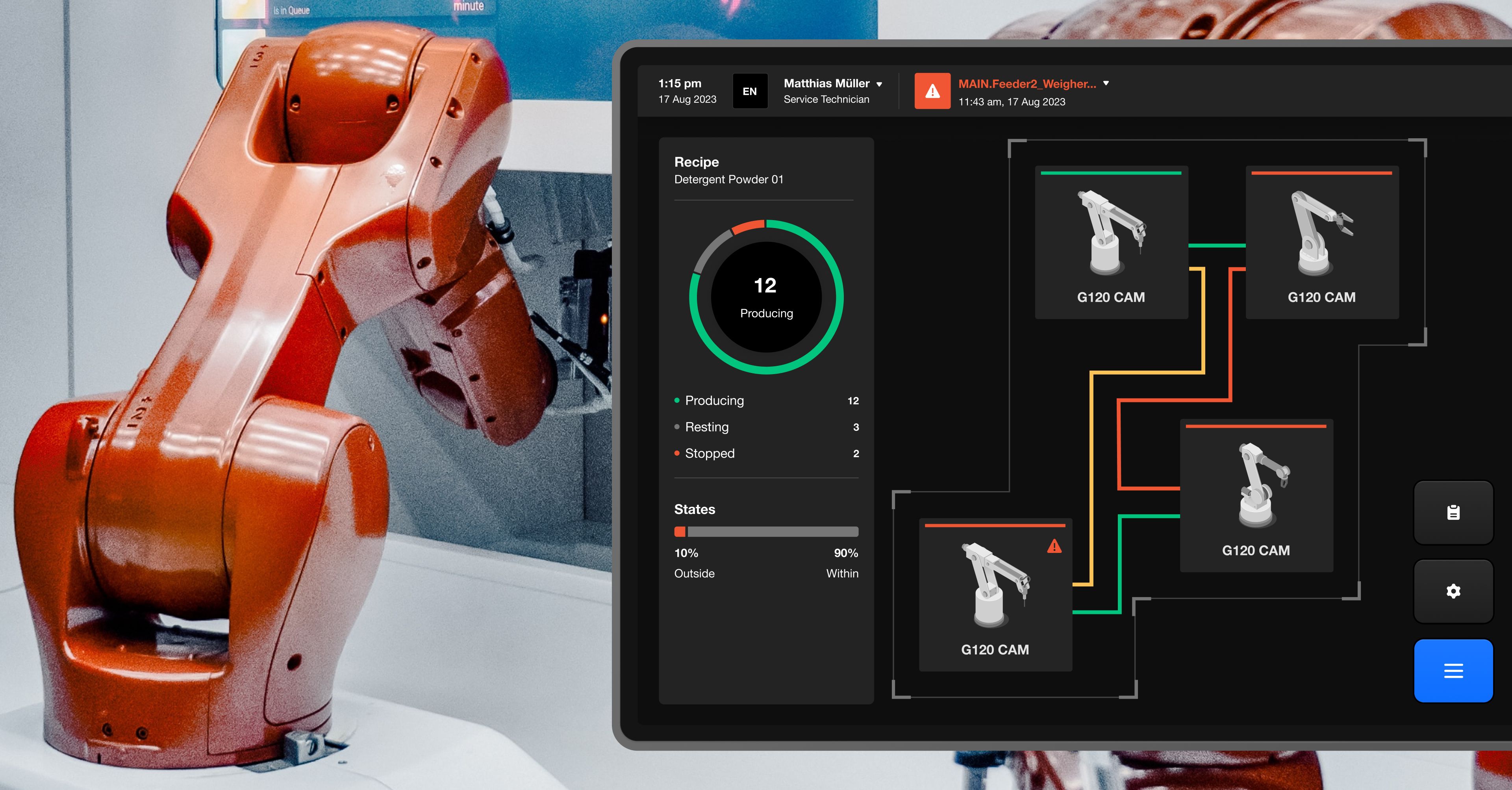

Operator using an industrial HMI touchscreen

Incremental updates, new functions, and shifting operator roles fragment what was once coherent. Industrial controllers, medical instruments, and laboratory tools all face the same problem: interface drift that increases cognitive load and operational friction.

Answer: The Usability Renewal Cycle treats redesign as a recurring, evidence-based process that restores alignment between human capabilities, device logic, and organisational goals. When usability metrics such as task time, error rate, or support volume keep degrading despite patches, a full renewal is needed. ISO 9241-11:2018 defines usability in terms of effectiveness, efficiency, and satisfaction in a specified context of use, and ISO 9241-210:2019 describes human-centred design as an iterative process, so usability has to be maintained iteratively rather than achieved once. Classic human factors literature links such drift to the limits of workload and situation awareness (e.g., Endsley, 1995; Wickens, 2008).
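The renewal trigger described above can be expressed as a simple decision rule. The sketch below is a minimal Python illustration, assuming a metric where lower is better (for example, errors per 100 tasks) and hypothetical thresholds: renewal is flagged when the metric stays above a tolerated margin over the baseline for several consecutive patch releases. The function name, the 10% tolerance, and the three-release window are illustrative choices, not values taken from any standard.

```python
def renewal_needed(
    baseline: float,
    per_release: list[float],
    tolerance: float = 0.10,
    window: int = 3,
) -> bool:
    """Return True if a lower-is-better metric stayed above the tolerated
    baseline in each of the last `window` releases.

    Hypothetical rule: `tolerance` and `window` are illustrative defaults,
    not values from ISO 9241 or IEC 62366.
    """
    if len(per_release) < window:
        return False  # not enough releases to judge a trend
    limit = baseline * (1 + tolerance)
    # Patches have not pulled the metric back under the limit in any recent release.
    return all(value > limit for value in per_release[-window:])


# Example: errors per 100 tasks, baseline 2.0, measured after each patch release.
print(renewal_needed(2.0, [2.1, 2.3, 2.4, 2.5]))  # True
print(renewal_needed(2.0, [2.1, 2.3, 2.1, 2.5]))  # False
```

The same rule can be applied separately to task time and support volume, with renewal triggered if any of them fires.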

Industry studies such as Forrester TEI (2024) and the McKinsey Design Value Index (2023) report that structured design practices deliver measurable business value: higher efficiency, faster onboarding, and shorter time to market.

Why Redesign Instead of Patch?

Answer: Patching fixes symptoms; redesign restores structure.

A professional GUI is more than a control surface: it is cognitive infrastructure that mediates perception and decision-making between operators, engineers, and automation.

Operators in a process control environment, showing how professional device interfaces function as cognitive infrastructure that links humans, machines, and automation.

When that infrastructure weakens, performance and trust erode. Human error research shows how latent conditions accumulate across layers (Reason, 1990), and skills-rules-knowledge models explain performance shifts under stress (Rasmussen, 1983).

Redesign renews this infrastructure. Drawing on Norman's design principles (Norman, 2013) and cognitive systems engineering (Vicente, 1999), it makes correct actions intuitive and helps prevent costly errors. It re-establishes shared understanding across roles, supporting situation awareness (Endsley, 1995) and balanced workload (Hart & Staveland, 1988; Wickens, 2008).

The Usability Renewal Cycle formalises renewal as a structured, measurable process rooted in human factors engineering rather than cosmetic refresh.

How Does the Five-Phase Renewal Process Work?

1. Discovery and Baseline Assessment

Principle: Every redesign begins with direct evidence.

Field observation and contextual inquiry reveal where actual workflows diverge from documentation. Operators often develop workarounds that expose structural friction. Collect baseline metrics such as task duration, error frequency, and support volume to quantify degradation. Where appropriate, use recognised instruments such as the System Usability Scale (SUS; Brooke, 1996, with acceptability ranges from Bangor et al., 2009) and the NASA-TLX for task-level workload (Hart & Staveland, 1988).
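Both instruments have fixed scoring procedures, which makes baseline collection easy to automate. The Python sketch below scores the SUS as Brooke (1996) specifies (odd items contribute the response minus 1, even items contribute 5 minus the response, and the sum is multiplied by 2.5) and computes the raw, unweighted NASA-TLX, a widely used simplification of the weighted procedure in Hart & Staveland (1988). Function and subscale key names are illustrative assumptions.

```python
# SUS: 10 items answered on a 1-5 scale (Brooke, 1996).
# Raw TLX: unweighted mean of the six NASA-TLX subscales rated 0-100.

TLX_SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def sus_score(responses: list[int]) -> float:
    """Return one participant's System Usability Scale score (0-100)."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("SUS responses must be on a 1-5 scale")
    total = 0
    for item, response in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item % 2 == 1 else (5 - response)
    return total * 2.5


def raw_tlx(ratings: dict[str, float]) -> float:
    """Return the unweighted (raw) TLX workload score (0-100)."""
    missing = set(TLX_SUBSCALES) - set(ratings)
    if missing:
        raise ValueError(f"missing subscales: {sorted(missing)}")
    return sum(ratings[name] for name in TLX_SUBSCALES) / len(TLX_SUBSCALES)


print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
print(raw_tlx({"mental": 60, "physical": 20, "temporal": 50,
               "performance": 30, "effort": 55, "frustration": 25}))  # 40.0
```

Per-participant scores are then averaged for the baseline, and the same functions can be reused unchanged in later evaluation rounds.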

In parallel, perform technical discovery: evaluate middleware, display hardware, and software constraints (e.g., Qt for MCUs, Crank Storyboard, TouchGFX). The outcome is a prioritised inventory of usability issues tied to measurable performance gaps. For control rooms or process industries, align observations with ISO 11064 (control-centre ergonomics) and alarm management guidance (e.g., EEMUA 191).
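One way to turn observations into a prioritised inventory is to score each issue and sort. The Python sketch below assumes a hypothetical scheme, a 1–4 severity rating weighted by the share of observed sessions affected, with measured time loss as a tie-breaker; teams should substitute whatever severity scale and weighting their own process defines.

```python
from dataclasses import dataclass


@dataclass
class UsabilityIssue:
    # Hypothetical record for one issue found during discovery.
    title: str
    severity: int         # 1 = cosmetic ... 4 = blocks the task or affects safety
    frequency: float      # share of observed sessions affected, 0.0 to 1.0
    extra_seconds: float  # mean time lost per affected task versus the baseline


def priority(issue: UsabilityIssue) -> float:
    # Illustrative weighting: severity scaled by how often the issue occurs.
    return issue.severity * issue.frequency


def prioritise(issues: list[UsabilityIssue]) -> list[UsabilityIssue]:
    # Highest priority first; ties are broken by the larger measured time loss.
    return sorted(issues, key=lambda i: (priority(i), i.extra_seconds), reverse=True)


inventory = prioritise([
    UsabilityIssue("Alarm acknowledge hidden in a submenu", 4, 0.25, 12.0),
    UsabilityIssue("Inconsistent unit labels across screens", 2, 0.60, 3.0),
    UsabilityIssue("Low-contrast status icons", 1, 0.80, 1.5),
])
for issue in inventory:
    print(f"{priority(issue):.2f}  {issue.title}")
```

In this example the common labelling problem outranks the rarer alarm issue. Because a pure severity-times-frequency product can rank a safety-relevant problem below a frequent annoyance, many teams treat safety-related issues as a separate category that overrides the score.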

2. Requirements Definition and Compliance Alignment

Principle: Translate evidence into measurable usability goals.

Convert findings into testable criteria aligned with ISO 9241-210 and IEC 62366-1:2015/A1:2020. Conduct cross-functional workshops with engineering, UX, QA, and compliance to balance human factors with embedded constraints. Maintain a traceability matrix linking each requirement to its supporting evidence and validation activities. This creates an auditable rationale for the usability engineering file required by IEC 62366-1 and for design history files.
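A traceability matrix is essentially a mapping from requirements to evidence and validation records, so gaps can be checked mechanically before an audit. The Python sketch below uses hypothetical requirement and record IDs (UR-, OBS-, FT-, ST-) purely for illustration; neither IEC 62366-1 nor ISO 9241-210 prescribes this data model.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    # Hypothetical record for one testable usability requirement.
    req_id: str
    criterion: str  # measurable acceptance criterion
    evidence: list[str] = field(default_factory=list)     # discovery findings it rests on
    validations: list[str] = field(default_factory=list)  # formative/summative tests that verify it


def trace_gaps(matrix: list[Requirement]) -> dict[str, list[str]]:
    """List requirements that lack supporting evidence or a validation activity."""
    return {
        "no_evidence": [r.req_id for r in matrix if not r.evidence],
        "no_validation": [r.req_id for r in matrix if not r.validations],
    }


matrix = [
    Requirement("UR-01", "Acknowledge an active alarm in under 5 s", ["OBS-03"], ["FT-2"]),
    Requirement("UR-02", "Under 1 dose-entry error per 50 tasks", ["OBS-07"], []),
    Requirement("UR-03", "SUS of at least 70 for new operators", [], ["ST-1"]),
]
print(trace_gaps(matrix))
# {'no_evidence': ['UR-03'], 'no_validation': ['UR-02']}
```

Running such a check as part of each review keeps the matrix from silently falling behind the requirements set.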

3. Concept Development and Prototyping

Principle: Test structure before aesthetics.

Generate design hypotheses through alternative information architectures and interaction models. Mid-fidelity prototypes validate logic and hierarchy before visual detail introduces bias. Standardise reusable components into a design system following modular principles (Frost, 2016). Consider cognitive work analysis and abstraction hierarchies to keep functional purpose visible at every level (Vicente, 1999).

Parallel proofs of concept confirm feasibility within performance budgets, ensuring prototypes translate into viable implementations.
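Feasibility within a performance budget can be checked directly against measurements from a proof of concept. The Python sketch below assumes a hypothetical 60 frames-per-second target (a budget of about 16.7 ms per frame) and flags screens whose nearest-rank 95th-percentile frame time exceeds it. Screen names and timings are illustrative, and real budgets usually also cover memory, flash, and input latency.

```python
FRAME_BUDGET_MS = 1000 / 60  # assumption: 60 fps target, about 16.7 ms per frame


def p95(samples_ms: list[float]) -> float:
    """Nearest-rank 95th percentile of measured frame times."""
    if not samples_ms:
        raise ValueError("no samples")
    ordered = sorted(samples_ms)
    rank = (95 * len(ordered) + 99) // 100  # integer ceiling of 0.95 * n
    return ordered[rank - 1]


def over_budget(screens: dict[str, list[float]], budget_ms: float = FRAME_BUDGET_MS) -> list[str]:
    """Return the screens whose 95th-percentile frame time exceeds the budget."""
    return [name for name, samples in screens.items() if p95(samples) > budget_ms]


# Hypothetical frame-time samples (ms) captured from a proof-of-concept build.
measurements = {
    "overview": [12.0] * 19 + [15.0],
    "trend": [14.0] * 18 + [22.0, 25.0],
}
print(over_budget(measurements))  # ['trend']
```

A high percentile is used rather than the mean so that occasional slow frames, which operators notice as stutter, are not averaged away.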

4. Evaluation and Iteration

Principle: Verification is the engine of renewal.

Usability testing confirms whether redesign hypotheses achieve measurable improvement. Apply formative testing early and summative validation near completion as defined in IEC 62366. Combine quantitative metrics (task time, error rates) with qualitative observations. Include both normal and edge scenarios (alarms, degraded modes, safety overrides) to ensure resilience. Use standardised measures where possible (e.g., SUS, NASA‑TLX; guidance in Sauro & Lewis, 2016; Tullis & Albert, 2013).

Each evaluation cycle refines the interface and strengthens compliance documentation. Over successive cycles, teams can demonstrate measurable error reduction and faster learning curves.
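Comparing an evaluation round against the baseline is mostly arithmetic. The Python sketch below summarises task times with the geometric mean, which Sauro & Lewis (2016) recommend for the skewed task-time data typical of small usability samples, and reports the relative change in task time and error rate. The sample values are hypothetical, and a real analysis would add confidence intervals before claiming an improvement.

```python
from statistics import geometric_mean


def summarise(times_s: list[float], errors: int, attempts: int) -> dict[str, float]:
    """Summarise one test round: geometric-mean task time and error rate."""
    return {"task_time_s": geometric_mean(times_s), "error_rate": errors / attempts}


def percent_change(before: float, after: float) -> float:
    """Relative change versus baseline; negative values mean a reduction."""
    return (after - before) / before * 100


# Hypothetical data: the same task, baseline interface versus redesign prototype.
baseline = summarise([40.0, 90.0, 60.0], errors=8, attempts=40)
redesign = summarise([30.0, 45.0, 40.0], errors=3, attempts=40)

for metric in baseline:
    print(f"{metric}: {percent_change(baseline[metric], redesign[metric]):+.1f}%")
```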

5. Implementation, Validation, and Rollout

Principle: Implementation proves discipline.

Move validated designs into production within an architecture that maintains separation of concerns. Use embedded frameworks such as Qt for MCUs or Crank Storyboard to decouple presentation from logic. Regulatory validation includes summative usability tests and complete documentation in line with IEC 62366. Roll out in controlled phases, update manuals and training, and monitor adoption metrics for 6–12 months. A disciplined rollout prevents regression and provides data to demonstrate ROI.
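The separation of concerns that frameworks such as Qt for MCUs or Crank Storyboard encourage can be illustrated independently of any framework. The Python sketch below is a hypothetical observer-style split: the controller owns device state and validation rules, and the view only formats what it is given, so the presentation layer can be redesigned without touching logic. Class names, the setpoint range, and the units are assumptions for illustration, not either framework's API.

```python
from typing import Callable


class SetpointController:
    """Device logic: owns state and rules; knows nothing about rendering."""

    def __init__(self, low: float = 0.0, high: float = 120.0) -> None:
        self._low, self._high = low, high  # hypothetical permitted range
        self._value = low
        self._listeners: list[Callable[[float], None]] = []

    def subscribe(self, listener: Callable[[float], None]) -> None:
        self._listeners.append(listener)

    def set_setpoint(self, value: float) -> None:
        # Validation lives in the logic layer, so any redesigned view inherits it.
        if not self._low <= value <= self._high:
            raise ValueError(f"setpoint {value} outside {self._low}-{self._high}")
        self._value = value
        for notify in self._listeners:
            notify(value)


class SetpointLabel:
    """Presentation: formats state for display and can be redesigned freely."""

    def __init__(self) -> None:
        self.text = ""

    def update(self, value: float) -> None:
        self.text = f"{value:.1f} °C"


controller = SetpointController()
label = SetpointLabel()
controller.subscribe(label.update)
controller.set_setpoint(72.5)
print(label.text)  # 72.5 °C
```

Replacing `SetpointLabel` with a new widget requires no change to `SetpointController`, which is the property that keeps a redesign from regressing device behaviour.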

How Can Governance Sustain the Renewal Cycle?

Answer: Usability maturity depends on institutionalising redesign.

Maintain a design system (component libraries, style guides, and interaction standards) to preserve coherence across product generations. Assign ownership and governance to prevent the drift that made redesign necessary in the first place. In safety-critical and mission-critical environments, integrate resilience engineering concepts (Hollnagel, 2012).
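Part of design-system governance can be automated by checking that components use only approved design tokens. The Python sketch below assumes a hypothetical token table and component style map (names and colour values are illustrative) and reports every style value that falls outside the system, one early signal of the drift described above.

```python
# Hypothetical approved tokens owned by the design-system team.
APPROVED_TOKENS: dict[str, str] = {
    "color.alarm.high": "#D32F2F",
    "color.alarm.low": "#F9A825",
    "color.text.primary": "#212121",
    "font.size.body": "16px",
}


def off_system_values(styles: dict[str, dict[str, str]]) -> list[tuple[str, str, str]]:
    """Return (component, property, value) for every value not backed by a token."""
    approved = set(APPROVED_TOKENS.values())
    return [
        (component, prop, value)
        for component, props in styles.items()
        for prop, value in props.items()
        if value not in approved
    ]


component_styles = {
    "AlarmBanner": {"background": "#D32F2F", "font-size": "16px"},
    "TrendLegend": {"color": "#222222", "font-size": "15px"},  # drifted values
}
for finding in off_system_values(component_styles):
    print(finding)
```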

Continuous feedback loops turn redesign into the cyclical maintenance of clarity. Use analytics and operator interviews to detect recurring issues. Where viable, field telemetry provides objective usage data. Periodic usability audits keep interface behaviour aligned with evolving workflows. In mature organisations, governance embeds usability engineering into the quality-management system itself (Kirwan, 1994).
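Detecting recurring issues from field telemetry can start with a simple aggregation. The Python sketch below assumes hypothetical log events of the form (operator ID, screen, error code) and flags any screen and error combination reported by at least a minimum number of distinct operators, which helps separate structural problems from one person's habits. The event format and threshold are assumptions.

```python
from collections import defaultdict


def recurring_issues(
    events: list[tuple[str, str, str]], min_operators: int = 3
) -> list[tuple[tuple[str, str], int]]:
    """Return ((screen, error_code), operator_count) pairs seen by enough distinct operators."""
    operators_by_issue: dict[tuple[str, str], set[str]] = defaultdict(set)
    for operator_id, screen, error_code in events:
        operators_by_issue[(screen, error_code)].add(operator_id)
    flagged = [
        (issue, len(operators))
        for issue, operators in operators_by_issue.items()
        if len(operators) >= min_operators
    ]
    # Most widespread issues first.
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical telemetry events.
log = [
    ("op1", "dosing", "E12"), ("op2", "dosing", "E12"),
    ("op3", "dosing", "E12"), ("op1", "dosing", "E12"),
    ("op1", "trend", "E07"), ("op2", "trend", "E07"),
]
print(recurring_issues(log))  # [(('dosing', 'E12'), 3)]
```

Flagged combinations are candidates for follow-up interviews rather than conclusions in themselves.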

How Does Renewal Translate Into ROI?

Answer: Renewal pays for itself through efficiency and risk reduction.

| Metric | Typical Improvement | Business Effect |
|---|---|---|
| Training time | ↓ 20–40% | Faster onboarding, lower cost |
| Task completion time | ↓ 15–30% | Higher throughput, less fatigue |
| Error rate | ↓ 30–50% | Reduced downtime and incidents |
| Support tickets | ↓ 25–40% | Lower support burden |

Reduced training and error costs shorten payback periods and improve cost predictability. Reusable components accelerate future development, and integrated usability engineering reduces regulatory friction. Market-level evidence from Forrester TEI (2024) and the McKinsey Design Value Index (2023) supports this business case; for a specific product, the metrics in the table should be measured with the methods recommended by Sauro & Lewis (2016) and Tullis & Albert (2013).
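The payback argument reduces to simple arithmetic once savings are expressed per month. The Python sketch below uses entirely hypothetical figures (training hours saved, support tickets avoided, unit costs, and redesign investment) to show the calculation; it ignores discounting and ongoing maintenance costs, which a full business case would include.

```python
def monthly_savings(
    training_hours_saved: float,
    hourly_cost: float,
    tickets_avoided: float,
    cost_per_ticket: float,
) -> float:
    """Savings per month from reduced training effort and support load."""
    return training_hours_saved * hourly_cost + tickets_avoided * cost_per_ticket


def payback_months(investment: float, savings_per_month: float) -> float:
    """Simple (undiscounted) payback period in months."""
    if savings_per_month <= 0:
        raise ValueError("savings must be positive to pay back the investment")
    return investment / savings_per_month


# Hypothetical figures for illustration only.
savings = monthly_savings(training_hours_saved=120, hourly_cost=50,
                          tickets_avoided=40, cost_per_ticket=75)
print(savings)                           # 9000
print(payback_months(180_000, savings))  # 20.0
```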

When presenting ROI, translate design results into executive language emphasising time, cost, and risk.

For example: "Reducing operator onboarding from three weeks to 90 minutes cut annual training expenditure by 35%."

Figure: Iterative Renewal Cycle aligned with ISO 9241‑210 and IEC 62366.

What Happens After Launch?

Answer: The cycle continues.

Deployment begins a new monitoring phase. Track key metrics for at least 12 months to ensure gains persist. Provide structured feedback channels for operators and support teams. Where permitted, telemetry such as interaction heat maps and error logs adds objective data.
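Checking whether gains persist can be framed as tracking how much of the launch improvement remains each month. The Python sketch below assumes a lower-is-better metric such as task time, a hypothetical pre-redesign baseline and launch value, and an illustrative rule that flags any month retaining less than 80% of the original gain.

```python
def retained_gain(baseline: float, launch: float, current: float) -> float:
    """Share of the launch improvement still present (1.0 = fully retained).

    Assumes a lower-is-better metric such as task time or error rate.
    """
    gain = baseline - launch
    if gain <= 0:
        raise ValueError("launch value shows no improvement over the baseline")
    return (baseline - current) / gain


def erosion_months(
    baseline: float, launch: float, monthly: list[float], min_retained: float = 0.8
) -> list[int]:
    """Return 1-based month numbers where too much of the gain has been lost."""
    return [
        month
        for month, value in enumerate(monthly, start=1)
        if retained_gain(baseline, launch, value) < min_retained
    ]


# Hypothetical task times (s): 100 before the redesign, 70 at launch.
print(erosion_months(100.0, 70.0, [70.0, 72.0, 75.0, 80.0, 78.0]))  # [4, 5]
```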

Apply version control and regression testing to protect the integrity of the redesign. Lessons learned feed back into the design system, so each cycle strengthens the next. In this way, the Usability Renewal Cycle becomes continuous learning through evidence, iteration, and renewal.

How Can the ROI of Usability Be Maintained?

Answer: Renewal is not a project milestone; it is a cultural competency.

The iterative principles of ISO 9241-210 (understand context, specify requirements, design solutions, evaluate outcomes) extend beyond single releases. Professional device interfaces behave as living systems: technologies evolve, workflows shift, expectations rise. Governance, audits, and feedback sustain coherence across these changes.

When renewal becomes habitual, interface quality shifts from operational liability to strategic advantage, uniting human cognition, technical precision, and business performance in one sustainable cycle of improvement.

References

- ISO 9241-11:2018. Ergonomics of human-system interaction — Usability: Definitions and concepts. iso.org

- ISO 9241-210:2019. Ergonomics of human-system interaction — Human-centred design. iso.org

- IEC 62366-1:2015/A1:2020. Application of usability engineering to medical devices. iec.ch

- ISO 11064 (parts 1–7). Ergonomic design of control centres. iso.org

- EEMUA 191. Alarm systems: A guide to design, management and procurement. eemua.org

- Endsley, M. R. (1995). Toward a theory of situation awareness in dynamic systems. Human Factors, 37(1), 32–64.

- Wickens, C. D. (2008). Multiple resources and mental workload. Human Factors, 50(3), 449–455.

- Reason, J. (1990). Human Error. Cambridge University Press.

- Rasmussen, J. (1983). Skills, rules, and knowledge; signals, signs, and symbols. IEEE Transactions on Systems, Man, and Cybernetics, 13(3), 257–266.

- Vicente, K. J. (1999). Cognitive Work Analysis. Lawrence Erlbaum Associates.

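- Hart, S. G., & Staveland, L. E. (1988). Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In P. A. Hancock & N. Meshkati (Eds.), Human Mental Workload (pp. 139–183). North-Holland.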

- Brooke, J. (1996). SUS: A quick and dirty usability scale. In P. W. Jordan et al. (Eds.), Usability Evaluation in Industry.

- Bangor, A., Kortum, P., & Miller, J. (2009). Determining what individual SUS scores mean: Adding an adjective rating scale. Journal of Usability Studies, 4(3), 114–123.

- Tullis, T., & Albert, W. (2013). Measuring the User Experience. Morgan Kaufmann.

- Sauro, J., & Lewis, J. R. (2016). Quantifying the User Experience. Morgan Kaufmann.

- Hollnagel, E. (2012). FRAM: The Functional Resonance Analysis Method. Ashgate.

- Norman, D. A. (2013). The Design of Everyday Things (Rev. ed.). Basic Books.

- Nielsen, J. (1994). Usability Engineering. Morgan Kaufmann.

- Frost, B. (2016). Atomic Design.

- Forrester TEI (2024). Total Economic Impact studies. forrester.com

- McKinsey Design Value Index (2023). mckinsey.com


This guide introduces the Usability Renewal Cycle, a structured approach to redesigning the GUI of professional and embedded devices. It combines ISO 9241-210 and IEC 62366 with evidence-based methods grounded in human factors research to improve reliability, compliance, and ROI.